At Button, our software is integrated into apps and websites with millions and millions of daily active users. Our goal is to make it easy to get Button integrated into your app, but that means that we can see additional bursts of traffic as new partners introduce us into their applications and websites. It's not uncommon for us to see certain pages on an integrated website go viral and receive a huge influx of traffic. In those situations we need to ensure that one particular integration can't hog up all of our server capacity and get in the way of our other partners.

While this problem can be (and is) attacked from many different angles, the one I'd like to focus on in this post is the concept of Rate Limiting. Simply put, rate limiting is the concept of restricting the rate of traffic flowing through a system to ensure it does not exceed the desired capacity. There are many great resources on rate limiting and the various ways it can be implemented (for example), but we'll be focusing on the tool we built here at Button: divvy.

We built divvy with a specific set of design goals in mind:

With these goals in mind, we broke ground on implementation. At a high level, divvy is implemented as both a server and a client (we also have a client implementation in Go that is not yet open-sourced).

The server is implemented in Node and is backed by Redis, with configuration defined in INI format. An example configuration might look like this:

[method=GET path=/pantry/* ip=*]

creditLimit = 1

resetSeconds = 3600

actorField = ip&

comment = '1 request per hour for GET /pantry/*, by IP'

[method=GET path=/crisper/carrots userId=*]

creditLimit = 10

resetSeconds = 60

actorField = userId

comment = '10 req/minute for GET /crisper/carrots, by user'

[default]

creditLimit = 0

resetSeconds = 0

comment = 'Default deny!'

This example touches on a few key features that we should dig into.

Actors

Rate limiting in divvy is done at the "actor" level, which means that for every request we feed into divvy we want to extract the "who" associated with it, whether that's a user ID, email address, IP, hostname, or what have you. Actors are non-prescriptive by design; you have a much better idea of who should be rate-limited than divvy does. In this example, we have two types of actors: IP and User ID. These are not special in any way, and can be thought of as interfaces the client's payload must provide to trigger this rule. For example, a common actor we limit by at Button is the requesting "organization".

Rule Evaluation

Rules are considered in order from top to bottom, with the first matching rule being used. The default rule at the end is a catch-all, but still just a regular rule. For example, let's be more considerate and allow limited access rather than a blanket denial:

[default]

creditLimit = 10

resetSeconds = 60

actorField = ip

comment = '10 requests per minute by default

In concert with what we know about actors, we can also allow certain clients increased access if we need to, by putting a more specific rule first:

# This user needs more than 10 req/s

[method=GET path=/crisper/carrots userId=10]

creditLimit = 100

resetSeconds = 60

comment = '100 req/minute for GET /crisper/carrots for user 10' Everyone else gets the standard

[method=GET path=/crisper/carrots userId=*]

creditLimit = 10

resetSeconds = 60

actorField = userId

comment = '10 req/minute for GET /crisper/carrots, by user'

Additionally, divvy will raise an error if you define a rule that cannot be reached. If we flipped the order of the two rules above our custom rate limit could never be reached, which would cause divvy to reject the configuration. This extra layer of validation can be helpful with more complex configurations.

The divvy server evaluates the provided configuration file at startup and then begins accepting TCP connections from clients. At Button we're using divvy in a few places throughout our service architecture, from our API front door to some internal workers that we want to restrict from sending notifications too often. These different use cases can happily co-exist within a single divvy configuration, or be split out and provided to separate divvy server instances. For example:

[method=GET path=/birthday-cake userId=*]

creditLimit = 1

resetSeconds = 31556926

actorField = userId

comment = 'Everyone gets one birthday cake per year'

[worker=card-sender cardType=birthday userId=*]

creditLimit = 1

resetSeconds = 31556926

actorField = userId

comment = 'Everyone gets one birthday card per year'

Buckets and Windows

As divvy fields inbound hits, it will automatically create buckets as defined by the configured actorField. These buckets will continue to decrement with each additional hit until they reach 0 credits, and the window will reset once the configured number of seconds (resetSeconds) has passed. Underneath it all, each bucket is represented as an expiring key in Redis, with the TTL set to match the bucket's resetSeconds. This provides a very lightweight but robust approach for automatically expiring individual rate limit windows without any additional work needing to be performed by the server.

The divvy protocol is a line-oriented "chat" protocol over a long-lived TCP connection. Clients send requests and receive responses, with consistent ordering guaranteed by the server. A request starts with a command, such as HIT, which comes along with an arbitrary number of key/value pairs describing the action being taken, to be used when matching against defined rules. A series of HIT requests leading to a rate limit being triggered might look like so:

HIT method=GET path=/pantry/cookies ip=192.168.1.1

OK true 2 3600

HIT method=GET path=/pantry/cookies ip=192.168.1.1

OK true 1 3599

HIT method=GET path=/pantry/cookies ip=192.168.1.1

OK true 0 3598

HIT method=GET path=/pantry/cookies ip=192.168.1.1

OK false 0 3597

Note that the divvy server sends us back all of the information we need if we want to provide helpful information to our users, in the form of how many requests they have remaining, and how long until the current rate limiting window is reset. Using the Node client is a little bit easier:

const DivvyClient = require('@button/divvy-client');

const client = new DivvyClient('localhost', 8321);

const result = await client.hit({

method: 'GET',

path: '/pantry/cookies'

}); console.log(result);

// { allowed: true, currentCredit: 2, nextResetSeconds: 3600 };

We built divvy to solve the issues we were seeing, and we hope that it can be useful to you as well. The loose coupling of the client and server can be a boon for easily applying additional rate limits, but can also lead to some additional context switching while building a new API.

When we first built divvy, we were primarily writing services using NodeJS, and the choice of technology here reflects our ecosystem and tooling at the time. As a protocol, we may determine in the future that we would get better performance or benefits from type safety in a different language, such as Go. The divvy protocol is designed to be language-agnostic and lends itself easily to server and client implementations in many different languages.

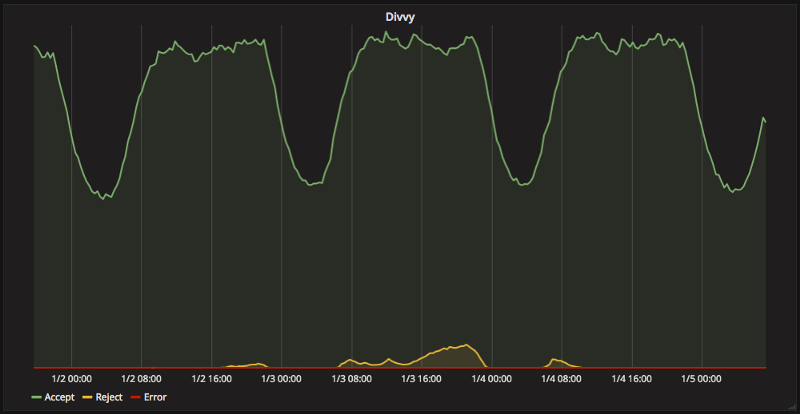

We've been running divvy in production for over a year now without issue, with it catching numerous traffic bursts and preventing these spikes from affecting our internal systems and keeping our business chugging along.

If you're interested in helping us improve it, feel free to check out the source code on Github:

Of course, if you'd like to help us build entirely different things, we're always looking for great engineers.